On October 21, 2016, at approximately 4am PDT, the internet broke. OK, we know the internet doesn’t “break.” But hundreds of important services powering our modern web infrastructure had outages – all stemming from a DDoS attack targeting Dyn, one of the largest DNS providers on the internet.

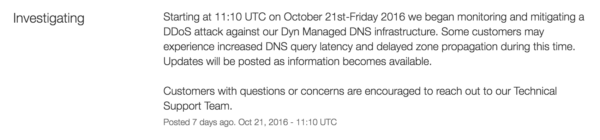

Here is the initial status notification Dyn customers received that morning:

This was the first of 11 status notifications Dyn would post. As the hours passed, the incident escalated. We’re all pretty used to the idea of using and building web services that depend on uptime from many other web services. It helps us work, build, and share information faster than ever before. But with this efficiency comes a vulnerability: sometimes a really important domino in your stack, like Dyn, tips over. As more and more cloud services became unavailable, the domino effect ensued, causing one of the largest internet outages in recent history. The sheer scale of the incident set this day apart from past cyber attacks.

We never want to see our customers go through a day like Friday. But, in reality, it’s the reason we exist as a product – to give companies like Dyn a clear communication path to customers in the worst of times. And the truth is that downtime is not unique to Dyn. It’s inevitable for all services. Downtime also doesn’t require a massive DDoS attack; a one-line bug in production can trigger it. So, as the internet recovers from the outage, let’s figure out how we, as an industry, can learn from the incident. On our end, we’ll be working on better ways for companies to identify issues from third-party service providers and building the right tools to keep end users informed even during a cloud crisis. It’s what drives us to come to work every day.

Thousands of the top cloud services in tech, including Dropbox, Twilio, and Squarespace, use Statuspage to keep millions of end users informed during incidents like this. Let’s look back on Friday, when the dominoes began to fall and we found ourselves watching 1 million notifications firing through the system.

The domino effect

How did Dyn handle the incident?

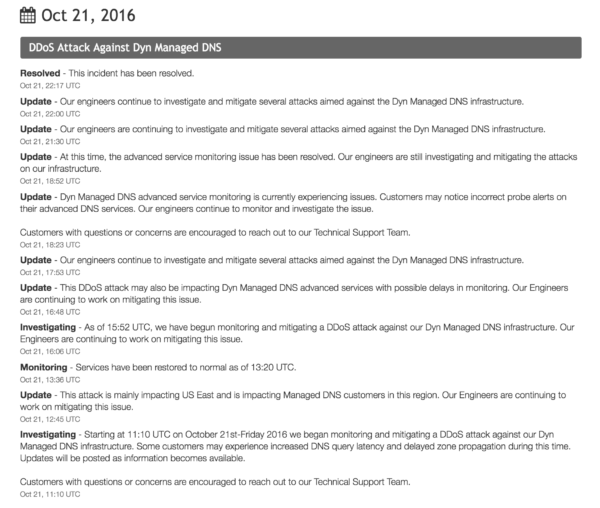

We want to give some major kudos to the teams at Dyn who worked around the clock to get things up and running again. They did a killer job of communicating clearly and regularly through their status page even in crisis mode. News outlets like the New York Times were even able to link to Dyn’s status page and quote their status updates during the incident.

Let’s take a closer look:

- Total number of updates: 11

- Average time between updates: 61 minutes

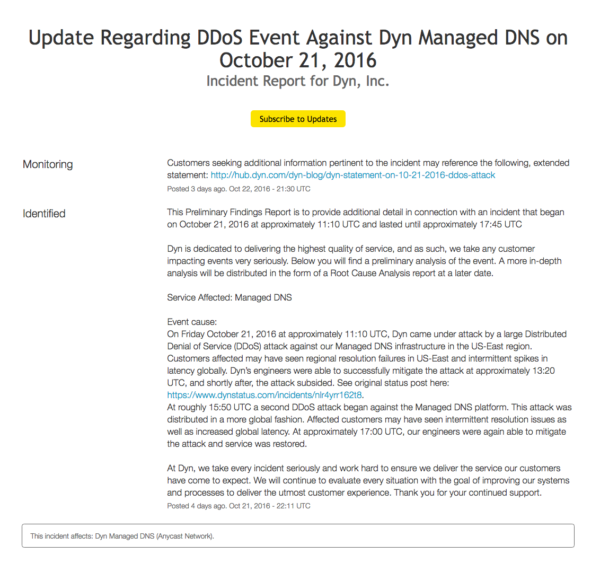
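If you want to track the same cadence metric for your own incidents, the arithmetic is simple: divide the time between the first and last update by the number of gaps, which is one less than the number of updates. Here is a minimal Python sketch. The function name and the timestamps are made up for illustration; they are not Dyn's actual update times.

```python
from datetime import datetime, timedelta


def average_gap_minutes(timestamps):
    """Return the mean gap, in minutes, between consecutive status updates."""
    if len(timestamps) < 2:
        raise ValueError("need at least two updates to measure a gap")
    ordered = sorted(timestamps)
    total = ordered[-1] - ordered[0]
    # N updates have N - 1 gaps between them.
    return total.total_seconds() / 60 / (len(ordered) - 1)


# Hypothetical data: 11 updates spaced exactly 61 minutes apart.
start = datetime(2016, 10, 21, 11, 0)
updates = [start + timedelta(minutes=61 * i) for i in range(11)]
print(average_gap_minutes(updates))
```

Note that 11 updates produce 10 gaps, so an average of 61 minutes corresponds to roughly 10 hours between the first and last update.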

Dyn also posted an incident report the following day and provided a link to a retrospective blog post that gives readers more information about what happened, how their teams responded, and how grateful they were to the community that came together to help resolve the issue. The frequency and transparency of Dyn’s communication allowed users to stay informed even during a time of great stress and uncertainty.

In the end, it was a tough day for Dyn and hundreds of other service providers, but the open and honest communication during the incident helped to build trust and transparency within their community.

Thank you for the frequent updates on the DDoS attacks. Good luck.

— Matt Fagala (@mfagala) October 21, 2016

unfortunate first time for everything #Dyn. There are no better #DNS co’s then Dyn & #IoT is frightening no DNS could have done better.

— Thomas Zickell (@thomaszickell) October 24, 2016

Creating your incident response plan

There is no time like the present – especially while incidents are top of mind for many people – to create an incident response plan or hone your existing one. There doesn’t have to be a major DDoS attack for it to come in handy (think bugs in production, website issues, login issues, etc.). No matter what the problem is, you’ll never regret some good old-fashioned preparation. Here are a few questions to think through ahead of time.

Define what constitutes an incident:

- How many customers are impacted?

- How long does a problem need to last before it counts as an incident?

- How degraded is the service and how do you determine severity level?
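One way to make these answers concrete is to write them down as a small rule your team can argue about. The Python sketch below is only an illustration: the `Signal` fields, the severity names, and every threshold are placeholder assumptions, and your own numbers will depend on your service and your customers.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    NONE = 0
    MINOR = 1
    MAJOR = 2
    CRITICAL = 3


@dataclass
class Signal:
    customers_impacted_pct: float  # share of customers affected, 0-100
    duration_minutes: float        # how long the problem has lasted so far
    error_rate_pct: float          # how degraded the service is, 0-100


# Placeholder threshold: shorter blips are not declared incidents.
MIN_DURATION_MINUTES = 5


def classify(signal):
    """Map the three questions above to a severity level."""
    if signal.duration_minutes < MIN_DURATION_MINUTES:
        return Severity.NONE
    # Use whichever measure looks worse: customer reach or degradation.
    worst = max(signal.customers_impacted_pct, signal.error_rate_pct)
    if worst >= 50:
        return Severity.CRITICAL
    if worst >= 10:
        return Severity.MAJOR
    if worst > 0:
        return Severity.MINOR
    return Severity.NONE


print(classify(Signal(customers_impacted_pct=15, duration_minutes=30, error_rate_pct=2)))
```

The value of writing it down is less the code itself than the agreement behind it: when something breaks, nobody has to debate whether it counts.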

Create an incident communication plan:

- How do you identify the incident commander?

- Who owns the communication plan?

- How will you communicate with users? What channel(s) will be used for different severity levels?

- Do you have templates for common issues you can pull from to accelerate the time from detection to communication?

- How will you follow up post-incident and work to avoid similar incidents in the future? Are you prepared for a post-incident review? At what point do you need to write a postmortem?
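The channel and template questions above lend themselves to a simple lookup prepared in advance. The following Python sketch assumes a made-up set of channels, stages, and message wording; none of it reflects the Statuspage product or API. It only drafts messages and leaves the actual sending to whatever tools you use.

```python
from string import Template

# Hypothetical mapping from severity level to communication channels.
CHANNELS = {
    "minor": ["status_page"],
    "major": ["status_page", "email"],
    "critical": ["status_page", "email", "sms"],
}

# Hypothetical pre-written templates, one per incident stage.
TEMPLATES = {
    "investigating": Template(
        "We are investigating reports of $symptom affecting $service. "
        "Next update by $next_update."
    ),
    "resolved": Template(
        "The issue affecting $service has been resolved. "
        "We will share a post-incident review soon."
    ),
}


def draft_update(severity, stage, **fields):
    """Return (channel, message) pairs for the given severity and stage."""
    # substitute() raises KeyError if a placeholder is left unfilled,
    # so a half-finished message cannot go out by accident.
    message = TEMPLATES[stage].substitute(**fields)
    return [(channel, message) for channel in CHANNELS[severity]]


for channel, message in draft_update(
    "major",
    "investigating",
    symptom="elevated error rates",
    service="Example API",
    next_update="15:00 UTC",
):
    print(channel, "->", message)
```

Having the wording settled ahead of time shortens the gap between detection and the first update, which is when customers are most anxious for news.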

While we hope there is never a next time, we know incidents similar to this are an inevitable part of our cloud world. Having a vetted plan in place before an incident happens and a dedicated way to communicate with your customers – whether that’s through a status page or other dedicated channels – will let your team focus on fixing the issue at hand all while building customer trust. If you do choose Statuspage, we pledge to be there for you so you can be there for your customers.